For centuries, moral philosophers have debated whether moral decisions should be based on their consequences (consequentialism) or on adherence to moral rules or norms (deontology; Gawronski & Beer, 2017). People intuitively rely on both consequentialist cost-benefit reasoning (CBR) and deontological moral rules (Greene, 2014). Moral dilemmas arise when these two perspectives conflict.

Moral learning occurs when a new experience changes how a person makes subsequent moral judgments and decisions. It can occur in various ways throughout the lifespan. For instance, children learn moral rules and norms by observing others' behavior (social learning; Langenhoff et al., 2022). Learning from the consequences of one's own actions is another form of learning (Ho et al., 2017), but it has received less attention in the context of morality.

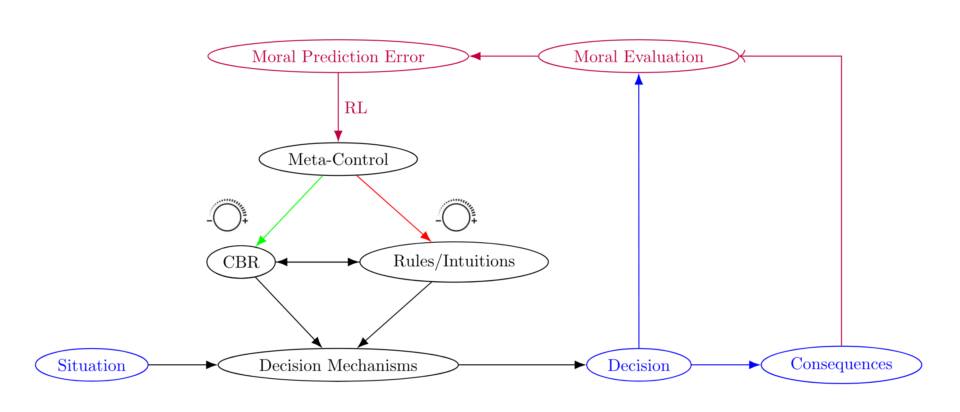

According to reinforcement learning (RL) theories of morality (Cushman, 2013; Crockett, 2013), people use two systems in moral decision-making:

- An automatic, intuitive model-free system evaluates actions by the average amount of (dis)value they produced in the past. The model-free system is often linked to intuitive, rule-based decision-making (Greene, 2014).

- A more computationally intensive model-based system builds a model of the world and uses it to reason about the consequences an action is likely to have in a given situation. The model-based system is often linked to cost-benefit reasoning (CBR).

Both mechanisms of moral decision-making have advantages and limitations. Model-free decision-making may lead to better outcomes in situations where it is difficult to construct an accurate model of the environment. However, its recommendations may be suboptimal in special situations where an action's consequences are much worse or much better than they usually are (Greene, 2017). Model-based decision-making compares the anticipated consequences of different actions in the specific situation at hand. However, it may sometimes lead to worse outcomes in real-world situations, where actual consequences do not always match the anticipated ones (Gigerenzer, 2008; Railton, 2014). Because model-based and model-free decision-making fail in different sets of situations, wise moral decision-making requires adaptively choosing between model-free and model-based mechanisms.
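The contrast between the two systems, and the kind of special situation in which the model-free system goes wrong, can be illustrated with a minimal Python sketch. It assumes a toy setting with two actions (`follow_rule`, `break_rule`) and two situations (`typical`, `exceptional`); the class names, labels, and outcome numbers are hypothetical and chosen only for illustration, and this is not the formal model of the cited RL accounts. The model-free valuer caches the average past (dis)value of each action, while the model-based valuer computes expected value from an explicit world model.

```python
from collections import defaultdict

# Hypothetical toy world model (illustrative numbers only):
# WORLD_MODEL[situation][action] is a list of (probability, outcome_value) pairs.
WORLD_MODEL = {
    "typical": {
        "follow_rule": [(1.0, 1.0)],
        "break_rule": [(1.0, -1.0)],
    },
    # A special situation in which following the rule usually backfires.
    "exceptional": {
        "follow_rule": [(0.9, -5.0), (0.1, 1.0)],
        "break_rule": [(1.0, 2.0)],
    },
}
ACTIONS = ["follow_rule", "break_rule"]


class ModelFreeValuer:
    """Values each action by the average (dis)value it produced in the past.
    The current situation is ignored: only the action's history matters."""

    def __init__(self):
        self.total = defaultdict(float)
        self.count = defaultdict(int)

    def update(self, action, outcome_value):
        self.total[action] += outcome_value
        self.count[action] += 1

    def value(self, action, situation=None):
        if self.count[action] == 0:
            return 0.0  # no experience with this action yet
        return self.total[action] / self.count[action]


class ModelBasedValuer:
    """Values each action by its expected consequences in the current
    situation, computed from an explicit model of the world."""

    def __init__(self, world_model):
        self.world_model = world_model

    def value(self, action, situation):
        return sum(p * v for p, v in self.world_model[situation][action])


def choose(valuer, actions, situation):
    """Pick the action the given system values most in this situation."""
    return max(actions, key=lambda a: valuer.value(a, situation))


# The model-free system has only ever experienced typical situations.
mf = ModelFreeValuer()
for _ in range(10):
    mf.update("follow_rule", 1.0)
    mf.update("break_rule", -1.0)

mb = ModelBasedValuer(WORLD_MODEL)

for situation in ("typical", "exceptional"):
    print(situation, "model-free:", choose(mf, ACTIONS, situation),
          "model-based:", choose(mb, ACTIONS, situation))
```

In the typical situation both systems recommend following the rule. In the exceptional situation the model-free system still recommends following it, because its cached values reflect only past experience, whereas the model-based system recommends breaking it because its world model anticipates the unusually bad consequences. The flip side, not shown here, is that the model-based choice is only as good as the world model it relies on.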

In this project, we investigate whether, and if so how, people's morality is shaped by learning from the consequences of past decisions. We are especially interested in how such learning shapes the situations in which people subsequently rely on moral rules versus cost-benefit reasoning (metacognitive moral learning).

We use a novel experimental paradigm in which participants face a series of realistic moral dilemmas between two conflicting choices: one prescribed by a moral rule (typically ‘deontological’) and the other favored by cost-benefit reasoning (typically ‘utilitarian’). Participants observe the consequences of each decision before making the next one.
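The idea of metacognitive moral learning in this kind of paradigm can be illustrated with a toy simulation. The following is a minimal Python sketch, assuming a simple learner that keeps one value per (dilemma type, strategy) pair, chooses a strategy with softmax probabilities, and updates the chosen strategy's value with a delta rule after observing its consequences. The dilemma types (`type_A`, `type_B`), outcome values, and parameters (`alpha`, `temperature`) are hypothetical, and this is not the model used to analyze our experiments.

```python
import math
import random

STRATEGIES = ("rule", "cbr")  # follow the moral rule vs. cost-benefit reasoning

# Hypothetical environment: the (dis)value produced by each strategy in two
# made-up dilemma types. These numbers are illustrative, not empirical.
OUTCOMES = {
    ("type_A", "rule"): 1.0,
    ("type_A", "cbr"): 0.0,
    ("type_B", "rule"): -1.0,
    ("type_B", "cbr"): 1.0,
}


def softmax_choice(rng, q, situation, temperature):
    """Pick a strategy with probability proportional to exp(Q / temperature)."""
    weights = [math.exp(q[(situation, s)] / temperature) for s in STRATEGIES]
    return rng.choices(STRATEGIES, weights=weights, k=1)[0]


def simulate(n_trials=400, alpha=0.1, temperature=0.5, seed=0):
    """Run a series of dilemmas. After each one the learner observes the
    consequence of the strategy it used and updates that strategy's value
    for the current dilemma type."""
    rng = random.Random(seed)
    q = {key: 0.0 for key in OUTCOMES}
    choices = []
    for t in range(n_trials):
        # Alternate between the two hypothetical dilemma types.
        situation = "type_A" if t % 2 == 0 else "type_B"
        strategy = softmax_choice(rng, q, situation, temperature)
        outcome = OUTCOMES[(situation, strategy)]
        # Delta rule: move the estimate a fraction alpha toward the observed outcome.
        q[(situation, strategy)] += alpha * (outcome - q[(situation, strategy)])
        choices.append((situation, strategy))
    return q, choices


q, choices = simulate()
for situation in ("type_A", "type_B"):
    preferred = max(STRATEGIES, key=lambda s: q[(situation, s)])
    print(situation, "->", preferred)
```

Under these made-up outcomes, the learned values should come to favor the rule in `type_A` dilemmas and cost-benefit reasoning in `type_B` dilemmas, so the learner relies on each strategy in the situations where it has produced better consequences. Keeping separate values per dilemma type is what makes the learning situation-specific; a single value per strategy would only capture an overall shift toward one strategy.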

In recent experiments, we found systematic, experience-dependent changes in people's moral decisions: participants adjusted how much they relied on moral rules versus cost-benefit reasoning according to which produced better consequences. This learning generalizes to novel decisions with real-world implications. Our findings suggest that individual differences in morality may be more malleable than previously thought (Maier, Cheung, & Lieder, 2024).